“Alexa, what am I doing tomorrow?”

Tinder, jumping in: There’s someone down the street you might be attracted to. She’s also attracted to you. You both like the same band, and it’s playing — would you like me to buy you tickets?

If Tinder’s CEO is to be believed, that’s love in five years. Looks like artificial intelligence (AI) will soon have our future by the balls — quite literally.

Increasingly, algorithms are getting better at anticipating our thoughts. The eerily targeted ads you see on Facebook are just the beginning of a more comprehensive precognition about to invade our lives. One day soon, AI will get so good that it will know our future choices better than we ourselves do.

I can hear your scepticism. I am a complex individual driven by free will and can’t be predicted, you say.

As it turns out, our free will is rather predictable. In this week’s FactorFuture, we’ll explore how smart machines are getting a step ahead of us.

The not-so-free will

Free will or hard determinism? It’s probably one of humanity’s most fractured and long-running debates.

While a satisfactory answer may remain elusive, even the most ardent supporters of free will have had to concede that it operates within certain constraints. These range from the genetic lottery that determines a significant portion of our physical and mental attributes to the huge role of nurture.

With every passing minute, our interactions with the world continue to shape our view of it and give us patterns we use to arrive at our choices. This is especially true in domains where we behave collectively, as a group, within rules specific to that arena, such as investing in the stock market.

The world’s largest hedge fund recently set out to automate much of its day-to-day management using AI and a team of programming professionals. Robot-only hedge funds, such as Sentient and Emma AI, use machine learning to simulate human traders and draw on data from outside the markets to predict market movements.

As it turns out, it is possible to make predictions even where our collective choices are driven by intuition (and little objective data), such as which movie is going to win the Oscars. Unanimous AI harnesses the collective intuition of many people (called a “swarm”) to guide its AI platform and arrive at predictions. When predicting the 2016 Oscars, the swarm was 76% accurate, while the individual accuracy rate was only 44%.
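
The swarm result is less paradoxical than it sounds. Unanimous AI's swarms have participants converge on an answer together in real time, and the sketch below does not attempt to model that. It is a minimal Python simulation of a simpler, swapped-in technique: plain plurality voting. It assumes independent voters, five nominees per category, and wrong guesses spread evenly across the other nominees. The function name and parameters are hypothetical. The sketch shows how a group can beat its members even when each member is right less than half the time.

```python
import random
from collections import Counter


def plurality_vote_accuracy(n_voters, n_options, p_correct, n_trials, seed=0):
    """Return (individual accuracy, group accuracy) for a simulated plurality vote.

    Hypothetical model, not Unanimous AI's method: option 0 is the right answer.
    Each voter independently picks it with probability p_correct; otherwise the
    voter picks one of the n_options - 1 wrong options uniformly at random.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    individual_hits = 0
    group_hits = 0
    for _ in range(n_trials):
        votes = [
            0 if rng.random() < p_correct else rng.randrange(1, n_options)
            for _ in range(n_voters)
        ]
        individual_hits += votes.count(0)
        counts = Counter(votes)
        top = max(counts.values())
        leaders = [option for option, c in counts.items() if c == top]
        # Break ties at random so a tie is not automatically counted as a win.
        if rng.choice(leaders) == 0:
            group_hits += 1
    return individual_hits / (n_trials * n_voters), group_hits / n_trials


if __name__ == "__main__":
    # Five nominees and 44% individual accuracy, echoing the Oscars figure above.
    individual, group = plurality_vote_accuracy(
        n_voters=25, n_options=5, p_correct=0.44, n_trials=2000
    )
    print(f"individual accuracy: {individual:.2f}, plurality accuracy: {group:.2f}")
```

The key assumption is independence. If people's mistakes cluster on the same wrong nominee, the gap between individual and group accuracy shrinks and can even reverse.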

But the real challenge AI poses to the idea of free will comes when it can make uncannily accurate predictions about individuals, or about groups small enough to be unique.

A future of personal precognition

Our behaviour is significantly influenced by the events and context around us, which can be deduced from the words we use and the content we share. Today, AI is beginning to make micro-predictions about us. This is possible thanks to the large amount of readily available personal data, exponentially increasing computing power, and progress on some long-held ideas about building deep neural networks.

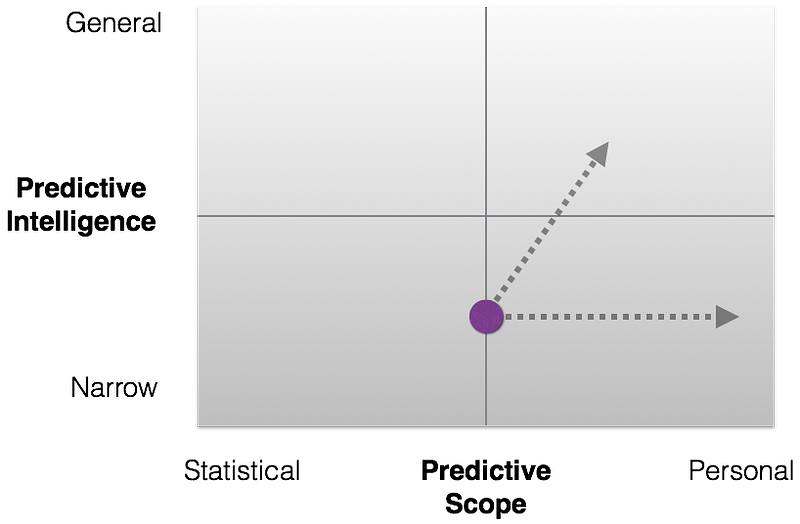

It’s still early days, though. The true holy grail for marketers and retailers is to move from making statistical predictions (for groups of people) to personal predictions (for individuals). Another challenge is moving from domain-specific insights to cross-domain predictions that draw on data to make more “general” predictions across a range of events.

Generalised predictions are harder to crack as they require a move towards artificial general intelligence. However, within specific domains, predictions are increasingly getting personalised. Today, we leave bits of our digital footprint everywhere: where we shop and work, what we search for, who our friends are, our schedules, what we eat and how much money we have in our accounts.

This has enabled companies to make targeted individual-based predictions of our interests and needs. Here are some examples:

- We’ve all experienced targeted ads by Google and Facebook or that spooky suggestion on social media to connect with someone we’ve probably been thinking about.

- Retailers are making highly personalised predictions that go beyond simple recommendations, like offering deals on gifts for a close family member’s upcoming birthday. A few years ago, Amazon patented “anticipatory shipping”, a method in which the e-tailer anticipates likely customer orders and ships the products to customers’ homes (or close to them) even before the order is placed.

- In the workplace, AI is already predicting whether an employee is going to quit. Predictive AI that draws on highly personalised information is likely to turn human resources departments into power users of AI-based tools as they seek to influence employees and keep them happy.

- In healthcare, AI is increasingly capable of predicting diseases, even mental illnesses, before the onset of obvious symptoms by combining non-medical personal data, genetics and diagnostic inputs. Machine learning could drastically improve our ability to predict suicides, potentially with more than 90% accuracy.

Still, predictive AI has not reached its full power, the point at which it becomes magically delightful (and creepy). That moment is just around the corner and is likely to be propelled by the following trends:

- The increasing ability of computers to interpret varied inputs, through better natural language understanding, image recognition and voice synthesis, and to combine all of these to make holistic “sense” of a user or environment.

- The rise of wearables and of virtual personal assistants (VPAs) with conversational interfaces, such as Siri and Alexa. VPAs will soon proliferate in our homes, workplaces, cars and elsewhere. They enable the collection of personal information that was earlier hidden, though this information still needs to be aggregated to make more holistic predictions.

- The consolidation of most of the data into a small set of platforms, owned by powerful companies and governments, which allows them to make complex predictions.

The final trend is particularly concerning as it puts an enormous amount of power in the hands of the wealthy and already powerful.

1984 redux: The government-technological complex

Tim Berners-Lee, in his open letter on the 28th anniversary of the Web (on March 12), wrote that “losing control of personal data” to big corporations was one of the big dangers facing the Web today. In the same letter, he also called out the increasing danger of surveillance by governments.

Today, a gang of five companies pretty much rules consumer and business data. Together, Google, Facebook, Amazon, Apple and Microsoft control nearly every byte of information we share on the internet. Owning such a large share of the internet’s data gives this “gang” an incredible advantage. Predictably, these companies, along with IBM, are investing millions in AI and machine learning.

As the predictive power of AI increases, it raises an important question: Can we allow a few rich and powerful people or organisations to control and access nearly all of the world’s data? Cambridge Analytica, the company that helped sway voters towards Donald Trump in the US presidential election, was initially funded by a billionaire who wanted to give right-wing media a bigger voice.

Then, there are the governments.

In India, the Unique Identification Authority of India (UIDAI) operates with surprisingly limited accountability. Thanks to the Aadhaar programme, it has become the custodian of the personal data of more than a billion Indians.

The list of data that the government intends to collect using Aadhaar keeps growing, ranging from pension withdrawals to tickets for cricket matches. Even children aren’t spared. From monitoring the midday meal scheme to tracking performance and awarding scholarships, the net has been cast wide.

While the more obvious concerns revolve around the safety and privacy of the data, AI and machine learning raise the spectre of an Orwellian, state-controlled existence. By providing complete visibility and control, are we creating a state in which dissenters could be penalised pre-emptively? Could the government predict potential competitors? Or would this lead to predictive policing à la Minority Report?

Once you have the prediction down pat, the big brothers can move on to mass manipulation.

Influence — the final nail in our destiny

Until now, we’ve been talking about tweaking algorithms to guess what we are up to. But what about tweaking people themselves? After all, we are susceptible to being manipulated and influenced into following certain paths.

Our choices are often shaped by how they are framed and by the context presented to us before we choose, says behavioural economist Dan Ariely. Tristan Harris, a former design ethicist at Google, argues that user interface and user experience (UI / UX) design can hijack our minds by exploiting gaps in our perception.

Today, a handful of platforms control our access to data, opinions and information. They influence us through repeated refinement and by exposing us to an ever-narrowing bubble of opinions. AI is beginning to nudge “outliers” towards popular opinion. But popular opinion can be engineered with malicious intent, and it sometimes ends up mirroring our worst collective prejudices.

Psychological operations (psyops) — a term used by the military to refer to the act of influencing large groups of people using psychological tools — is now pervading our lives.

Most recently, it helped get Donald Trump elected. Cambridge Analytica, which specialises in big-data based mass manipulation, created psychological profiles of millions of individual voters using nearly 5,000 separate data points in order to influence them with relevant micro-ads and content. Facebook is now a potent political and geo-strategic weapon.

Tomorrow, our computing interfaces will get more natural, like voice and immersive VR, and we’ll trust them more. Equally important will be the ability of AI to micro-personalise its approach.

Soon, AI will be able to personalise the style, tone and sophistication of language with which it communicates with us, to gain our trust. The information it presents will be so much in line with our thoughts, moods and beliefs that we will have no option but to trust it and be influenced.

Today, we have no control over the fragments of our personal data that lie across the Web. There is also a lack of transparency on how the information is being used to make predictions about our life. When we see a recommendation or a targeted ad, we aren’t made aware of what personal data has been used to arrive at these choices. Tomorrow, these predictions could determine our health insurance or college admission process.

We urgently need to take control of our information and demand greater transparency. If we don’t, we may end up in a totalitarian world where our destinies are predetermined and shaped by machine intelligence in the hands of a rich and powerful few.

Lead visual: Angela Anthony Pereira