“Whether we are based on carbon or on silicon makes no fundamental difference; we should each be treated with appropriate respect.”

― Arthur C. Clarke, 2010: Odyssey Two

Artificial intelligence will be the last invention humans ever need to make, believes Oxford researcher Nick Bostrom. Once AI reaches the Singularity (the point at which it surpasses human intelligence), technological progress could become so rapid that, to the human mind, all remaining progress would seem to happen in moments. In building this new world, Bostrom fears, humanity could end up crafting its own demise.

It’s an idea that has grabbed the attention of minds like Stephen Hawking, Elon Musk and Bill Gates. With these powerful voices joining in, a narrative has emerged around AI ‘controls’ and ‘structures’ to counterbalance the full-steam race among technology behemoths to perfect machine intelligence.

Yet is the eventual arrival of the Singularity the only thing to fear when creating artificial intelligence? What about the intervening years, as AI becomes more integrated into our lives? How will we influence it, and how will it influence us? What will its impact be on morality, culture and society as a whole?

AI as a mirror to human prejudices

Imagine a future where hailing an on-demand self-driving car is difficult for you because the platform’s deep-learning model has concluded, based on 20-odd attributes, that your profile matches that of a typical high-cancellation customer. Now imagine that one of these attributes is your ethnicity or economic status, and that it changes the entire equation. Worse still, extend these biases to insurance, health care or social benefits, and you have a dangerous new world lurking around the corner.

In this world, a sufficiently intelligent Facebook algorithm could decide that, to reduce potential conflict, it should not show certain types of content in your feed. What if that filter were based on your race, for instance?

It would be naïve to believe that these scenarios are far-fetched. Why, some of them are already playing out.

Microsoft’s cute Twitter bot, Tay, turned into a racist hate-monger in less than 24 hours. “It is as much a social and cultural experiment, as it is technical,” said a red-faced Microsoft when it pulled the bot down. Isn’t that the absolute truth about AI?

Didn’t we all go into a tizzy after seeing “PV Sindhu Caste” as a Google search suggestion? Setting aside whether it deserved the resulting outrage, the question that needs to be asked is this: how many people clicked the term after Google started suggesting it?

Weak AI (sometimes called soft or narrow AI, i.e. AI targeted at narrow tasks) is getting seriously good, seriously fast. Soon it is likely to be part of nearly every aspect of our lives. Is it learning our tribal prejudices and reinforcing them back to us? More importantly, how do we prevent this?

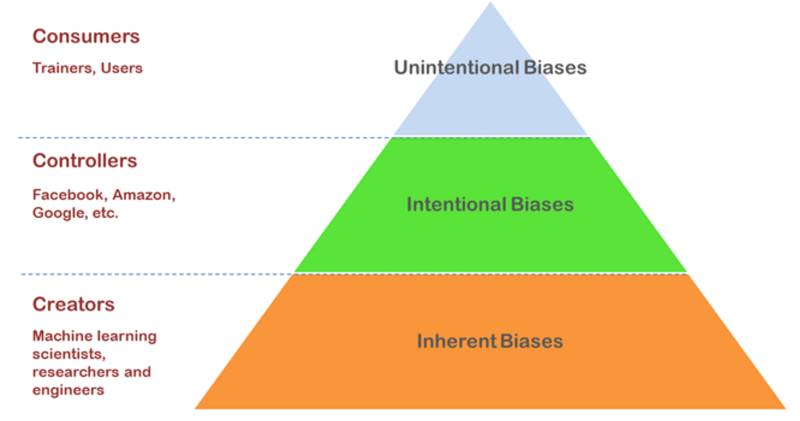

To grasp the complexity of the problem (and think of solutions), we need to understand the key human influencers at various stages in the journey of any AI tool or platform:

- The Creators

- The Controllers

- The Consumers

Creators: Inherent Biases

Cortana. Siri. Alexa. What’s the common thread that ties them together, besides the fact that they are all AI-based voice assistants? These futuristic assistants all present as women. Google Now doesn’t have a woman’s name, but it speaks in a woman’s voice by default.

Many reasons have been offered for this. The dudes building them don’t see a personality beyond a helpful, friendly woman. The clutch of early adopters likely echoes these views too. In essence, it is a perfect alignment of short-term purpose and our inherent biases.

Perhaps a patriarchal society, used to seeing women in servile roles, will continue to perpetuate them through AI personalities. Mad Men attitudes may continue to live inside The Matrix.

These implicit biases play out in other forms too. Functional robots are mostly modelled as male, and on the rare occasions when a female robot is built, it is often designed to reflect a sexualized, subservient image of women confined to a single role: the sexy assistant.

Beyond sexism lie other dangers, subtle enough to escape attention. Whole cultures, ethnicities and groups are going unrepresented in our AI revolution. As the Western world continues to play a leading role in building the framework for AI, its culture and idiosyncrasies get embedded ever deeper. Most AI platforms, for instance, are being trained on a bed of English-language semantics.

Unlike other technologies or machines, AI represents a fundamental encoding of human understanding that will drive our future. Today, it is being built to be compatible with a very small subsection of the world.

The danger is that the rest of the world may have to adapt to this narrow definition. And when that happens, will thousands of other cultures, languages and social norms begin to fade away? Will AI become a subtle form of socio-cultural colonization?

Controllers: Intentional biases

“I don’t know a lot of people who love the idea of living under a despot,” said Elon Musk, referring to a few large companies controlling AI development. A handful of companies have been gobbling up AI startups over the last five years. An even smaller handful touch nearly all the data passing across the Internet.

Facebook once ran a socio-psychological experiment in which it manipulated the emotions of its users by controlling what appeared in their news feeds. It is disturbing to imagine that a company built on advertising can toy with its users’ emotions at will. Imagine a young, impressionable teenage girl being constantly bombarded with content that reinforces the importance of physical beauty by narrow definitions. Then throw in adverts for beauty products and services, and bam!

Even more dangerous is the potential to reinforce racial and ethnic stereotypes simply because doing so aligns with a platform’s view of the world or helps sell more products. It’s easy to ‘weight’ algorithms towards certain outcomes while still having them appear unbiased.

This is not to say that today’s platforms are biased or seek to be. At the same time, it is important to recognize the power they hold to influence users as they pursue profit and growth.

Consumers: Unpredictable biases

Whatever mechanics AI is engineered from, one element is core to its intelligence: learning from the world’s evolving data. The rapid degeneration of Microsoft’s Tay is a perfect example of all the bad things an AI system can learn in the real world.

A 2012 study indicated that AI in its then-current form had the IQ of a four-year-old. Imagine letting a four-year-old interact with the Internet at large. It would take rather heavy doses of denial and optimism to hope that it would end up learning the right things.

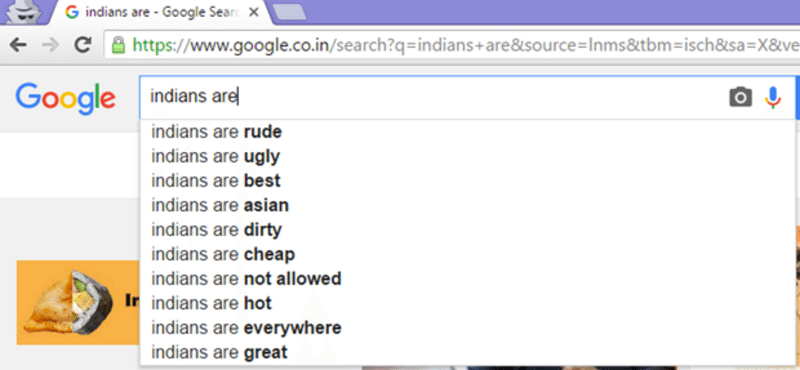

Google’s search autocomplete is an excellent example of a well-meaning machine-learning tool that can become biased. Most of us have seen the ugly, stereotypical suggestions that pop up when we search for something. For example, just typing “Indians are” produced suggested search phrases like the ones in the screenshot.

The real danger here is that we are also being trained to trust the incorruptibility of the algorithms powering our machines. We look at complex learning algorithms and assume they have been designed in a pure, unbiased manner (even when their creators are motivated by personal economic success). When this implicit trust extends to the content and viewpoints these algorithms disseminate, it creates a dangerous feedback loop.

FactorDaily has published the second part of this series.
